How we use AI to speed up manual penetration testing at Contentstack

TL;DR

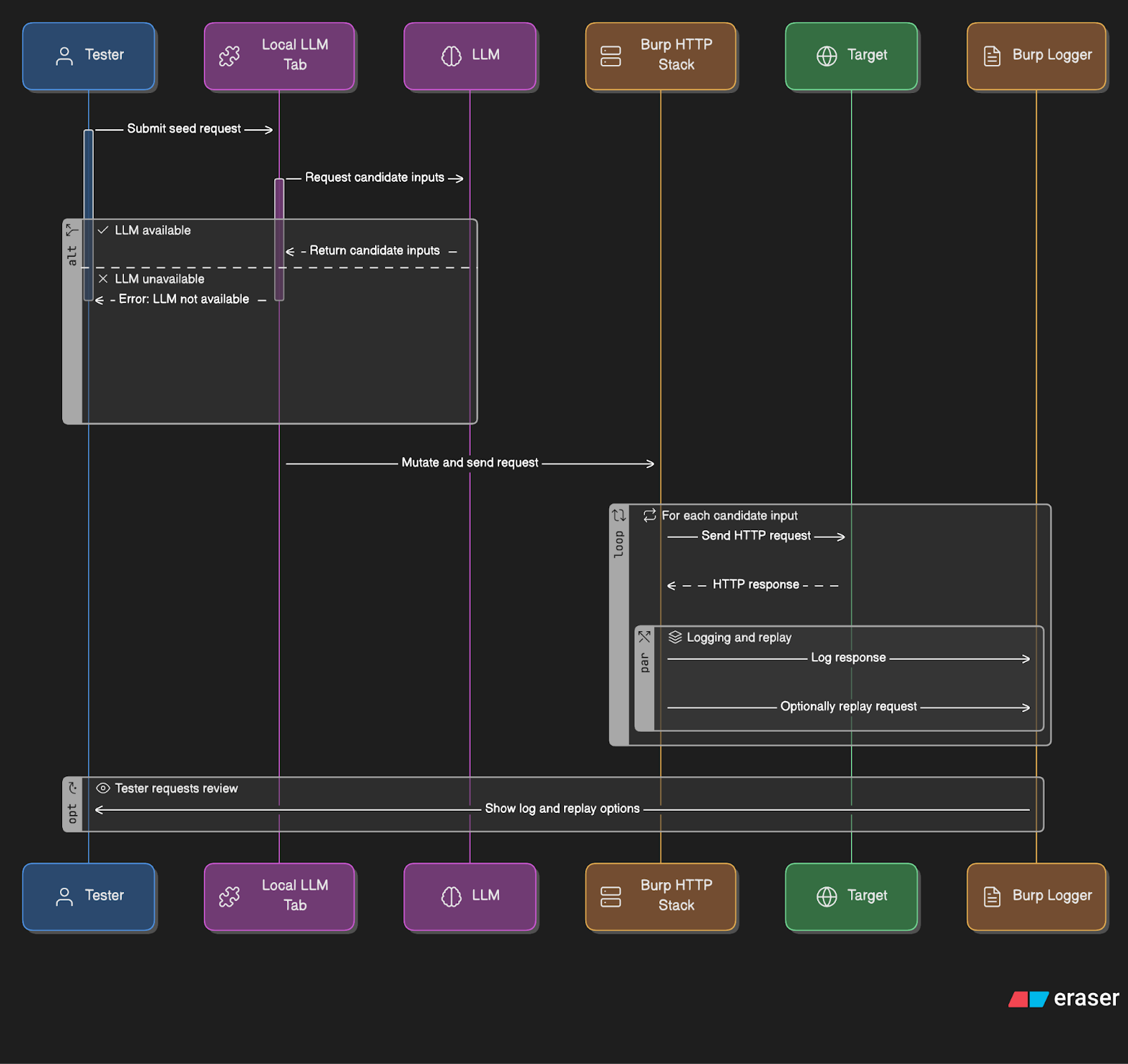

The Contentstack Security Team developed a small Burp Suite extension that connects any Burp request to a local LLM, or to an MCP client such as Claude Desktop. The extension proposes candidate inputs, mutates the current request and sends the variants through Burp Suite, so traffic, auth, scope and logging remain controlled.

Result: Our “think → craft → send → compare” loop on staging has dropped from minutes to seconds.

Contentstack is more than just a headless CMS today. It’s a composable DXP with Marketplace, Automation Hub, Brand Kit, Launch, Personalization and Lytics. That composability is powerful for builders, but it expands the attack surface.

Automation catches many classes of issues, but the most interesting bugs still show up when a human tests with domain context: auth edges, tenant boundaries, unusual encodings and quirky parsers. That’s why manual testing remains central to our security reviews.

Over one week, we streamlined one of the slowest manual loops, “think → craft input → send → compare”, by wiring a small AI helper into Burp Suite. This post describes the helper, how we use it and how it fits our process.

Why we built this

Manual payload generation and iteration are time-consuming. The process generally requires an engineer to:

- Identify parameters (URL, body, JSON, cookie)

- Try a handful of benign detection inputs

- Send them one by one and repeat with alternate encodings

- Move promising results into Repeater and dig deeper

Credit-metered cloud AI features are useful, but not always practical for continuous fuzzing on internal environments. We wanted a human-in-the-loop assistant that:

- Lives inside Burp’s workflow and logger

- Runs locally or via an org-approved MCP client

- Never sends anything without an explicit tester action

What we built

Local LLM Assistant (open source, Montoya API) — a Burp extension that adds a “Local LLM” tab and a context-menu action.

Key features:

- Seed from Burp: Right-click any request → “Use this request as seed.”

- Two generation modes:

  - Command mode: Leave the prompt blank, then select a vulnerability family (SQL/NoSQL/XSS/etc.), a location (URL/BODY/JSON/COOKIE) and a count. The model proposes candidate inputs.

  - Prompt mode: Write a short instruction; the model returns a structured list.

- Fire through Burp: The extension mutates the seeded request and sends variants in parallel through Burp’s HTTP stack (optionally adds each to Repeater).

- Encoding variants: Optionally send both normal and encoded versions (URL/Base64/HTML).

- Timing/observability: Logs generation time, send time and wall-clock total, so that we can see how the time is spent.

- MCP bridge: A tiny HTTP bridge that lets MCP clients (e.g., Claude Desktop, VS Code MCP) fetch the seed, propose inputs and call back to send, without requiring a local model download.

IMPORTANT: All processes run on authorized staging targets. A tester always selects the seed and clicks Send. All traffic remains in Burp.
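To make the "encoding variants" feature concrete, here is a minimal Python sketch of what producing the normal and encoded forms of one candidate could look like. The extension itself is built on the Montoya API, so this is an illustration only; the function name `encoding_variants` and the dictionary keys are assumptions, not names from the repo.

```python
import base64
import html
from urllib.parse import quote


def encoding_variants(candidate: str) -> dict[str, str]:
    """Return the raw candidate plus URL-, Base64- and HTML-encoded forms.

    Hypothetical helper: the names here are illustrative, not the extension's.
    """
    return {
        "raw": candidate,
        # safe="" percent-encodes every reserved character, including "/"
        "url": quote(candidate, safe=""),
        "base64": base64.b64encode(candidate.encode("utf-8")).decode("ascii"),
        # quote=True also escapes single and double quotes
        "html": html.escape(candidate, quote=True),
    }
```

Calling `encoding_variants("a b&c")` yields four strings that can each be substituted into the seeded request, so one model suggestion turns into several variants without another round-trip to the model.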

Architecture at a glance

- Burp stays in the control plane: the extension mutates the selected seed and fires via Burp’s HTTP stack (shown in Logger/Repeater).

- Logic runs locally: the extension builds variants and exposes a tiny bridge (/v1/seed, /v1/send).

- Local model (Ollama or other approved runtime) or MCP client via the bridge supplies candidate inputs.

- Generated variants flow back through Burp, so logging, auth and scope rules apply.

Why this matters: The LLM never talks directly to the target. Burp remains the network control plane, so logging, proxying, auth and scopes stay intact.
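The bridge idea can be sketched in a few lines of Python. Only the two paths `/v1/seed` and `/v1/send` come from the description above; the payload shapes, the `TOKEN` value, the placeholder `SEED` and the decision to queue candidates in `PENDING` rather than send them are all assumptions made for illustration. The real bridge lives inside the Burp extension and may look quite different.

```python
import hmac
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

TOKEN = "change-me"  # hypothetical bearer token
SEED = {"method": "GET", "url": "https://staging.example.test/search?q=1"}  # placeholder seed
PENDING: list[str] = []  # candidates proposed by the client, waiting for the tester


class BridgeHandler(BaseHTTPRequestHandler):
    def _authorized(self) -> bool:
        supplied = self.headers.get("Authorization", "").encode("utf-8")
        return hmac.compare_digest(supplied, f"Bearer {TOKEN}".encode("utf-8"))

    def _reply(self, status: int, payload: dict) -> None:
        body = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def do_GET(self) -> None:
        if not self._authorized():
            self._reply(401, {"error": "unauthorized"})
        elif self.path == "/v1/seed":
            self._reply(200, SEED)
        else:
            self._reply(404, {"error": "not found"})

    def do_POST(self) -> None:
        if not self._authorized():
            self._reply(401, {"error": "unauthorized"})
            return
        if self.path != "/v1/send":
            self._reply(404, {"error": "not found"})
            return
        length = int(self.headers.get("Content-Length", 0))
        try:
            data = json.loads(self.rfile.read(length) or b"{}")
            items = data.get("candidates") if isinstance(data, dict) else None
            if not isinstance(items, list):
                raise ValueError("candidates must be a list")
        except ValueError:
            self._reply(400, {"error": "expected a JSON object with a candidates list"})
            return
        PENDING.extend(str(c) for c in items)  # queued only; nothing is sent here
        self._reply(202, {"queued": len(items)})

    def log_message(self, *args) -> None:  # keep the sketch quiet
        pass


def make_server(port: int = 0) -> HTTPServer:
    """Bind to loopback only; port 0 lets the OS pick a free port."""
    return HTTPServer(("127.0.0.1", port), BridgeHandler)
```

Binding to `127.0.0.1` and checking a bearer token keeps the bridge reachable only from the tester's machine, and queuing instead of sending mirrors the rule that nothing leaves without an explicit tester action. Start it with `make_server(8765).serve_forever()` (the port is arbitrary).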

Results so far

From representative staging runs:

- End-to-end (generate + send): ~11–20 seconds typical (versus ~3–4 minutes in the first prototype).

- Biggest wins: Parallel sending + stricter structured output format.

- Tester experience: Fewer context switches, faster iteration and clearer timing to spot slowness (model vs. network).

Note: Your mileage may vary depending on model, network and target.
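To illustrate why parallel sending helps and how separate timings expose the slow part, here is a small Python sketch. `fake_send` is a stand-in that only sleeps; in the extension the send goes through Burp's HTTP stack. All names and the 50 ms delay are assumptions for the example.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_send(candidate: str) -> dict:
    """Stand-in for one request sent through Burp; sleeps to mimic latency."""
    start = time.perf_counter()
    time.sleep(0.05)  # pretend network round-trip
    return {"candidate": candidate, "status": 200,
            "seconds": time.perf_counter() - start}


def send_all(candidates, send=fake_send, workers: int = 8):
    """Send every candidate in parallel; return results and wall-clock seconds."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(send, candidates))  # map preserves input order
    return results, time.perf_counter() - start
```

With eight candidates and eight workers, the wall-clock total stays close to a single request's latency, while the sum of the per-request `seconds` values shows what a one-by-one loop would have cost. Logging generation time alongside these two numbers is what separates "the model is slow" from "the network is slow".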

Where AI helps — and where it doesn’t

Helps:

- Suggesting a few benign detection inputs quickly

- Covering boring variants and alternate encodings

- Moving “plausible” cases into Repeater for human investigation

Doesn’t replace:

- Understanding authorization flows, tenant isolation and data paths

- Choosing safe, business-relevant checks

- Turning a quirky response into a verified finding with evidence

We’re not trying to replace testers — we’re cutting keystrokes so testers can spend time on judgment and evidence-gathering.

Internal workflow

- Seed the request: In Repeater/Proxy history → right-click → “Use this request as seed.” The extension surfaces parameter names by location (URL/BODY/JSON/COOKIE) and cookies.

- Choose generation mode: Command mode for quick candidates; Prompt mode for targeted needs (e.g., “vary boolean and time-based checks”).

- Send & observe: Parallel requests fire through Burp; each result shows status, size and duration. Optionally add variants to Repeater for manual follow-up.

- Record findings: Log inputs, response evidence and timings to internal notes. If anything is interesting, write a reproducible proof and open a ticket.
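The "mutate the seed by location" step in this workflow can be sketched as follows. This is a simplified Python model, not the extension's code: the seed is a plain dictionary, `mutate` and `_swap` are hypothetical names, and only top-level JSON keys are handled.

```python
import json
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def _swap(pairs, name, value):
    """Replace the value of every pair whose key equals name."""
    return [(k, value if k == name else v) for k, v in pairs]


def mutate(seed: dict, location: str, name: str, value: str) -> dict:
    """Return a copy of seed with one parameter replaced at the given location."""
    variant = {**seed, "headers": dict(seed.get("headers", {}))}
    if location == "URL":
        parts = urlsplit(seed["url"])
        pairs = parse_qsl(parts.query, keep_blank_values=True)
        variant["url"] = urlunsplit(parts._replace(query=urlencode(_swap(pairs, name, value))))
    elif location == "BODY":  # form-encoded body
        pairs = parse_qsl(seed["body"], keep_blank_values=True)
        variant["body"] = urlencode(_swap(pairs, name, value))
    elif location == "JSON":  # top-level keys only in this sketch
        doc = json.loads(seed["body"])
        doc[name] = value
        variant["body"] = json.dumps(doc)
    elif location == "COOKIE":
        raw = variant["headers"].get("Cookie", "")
        pairs = [tuple(p.strip().split("=", 1)) for p in raw.split(";") if "=" in p]
        variant["headers"]["Cookie"] = "; ".join(
            f"{k}={v}" for k, v in _swap(pairs, name, value))
    else:
        raise ValueError(f"unknown location: {location}")
    return variant
```

Because `mutate` returns a new dictionary each time, the seed stays untouched and every candidate produces an independent variant that can be sent, compared against the baseline, or moved into Repeater.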

Guardrails and governance

- Human in the loop: No autonomous crawling — a tester selects the seed and clicks Send.

- Authorized targets only: UI, defaults, and docs emphasize “lab/staging use only.”

- Local model by default: Use a local model or route MCP calls through the local bridge with optional bearer tokens.

- Observability: All traffic flows through Burp Suite and is visible in Logger.

What we didn’t automate (on purpose)

- Assertions and finding classification (human judgment still required)

- Advanced session/state management tricks beyond Burp’s normal flows

- Intrusive traffic outside the tester’s explicit action

Roadmap

Planned improvements:

- Auto-iterate: A loop that runs for N rounds (or a time budget): plan → send → observe → revise. Each round scores response deltas (status/latency/body/header), keeps the top-K candidates and tweaks the strategy. This is a human-started, scope-bound, benign probe: execution stays in Burp while the model only proposes the next steps.

- Playbooks: Repeatable sequences for common test families.

- Assertions library: Non-destructive checks and diffing to assist triage.
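Since auto-iterate is still a roadmap item, the following Python sketch is only one possible shape for such a loop, not a description of planned code. The scoring weights, the `propose` and `send` callables and the dictionary fields (`status`, `seconds`, `size`) are all assumptions.

```python
import heapq


def score(baseline: dict, observed: dict) -> float:
    """Crude delta score: status change, latency shift and relative size shift."""
    total = 0.0
    if observed["status"] != baseline["status"]:
        total += 10.0  # arbitrary weight: a status change is the strongest signal
    total += abs(observed["seconds"] - baseline["seconds"])
    total += abs(observed["size"] - baseline["size"]) / max(baseline["size"], 1)
    return total


def auto_iterate(propose, send, baseline: dict, rounds: int = 3, top_k: int = 2):
    """Run a bounded number of propose -> send -> score rounds, keeping the top_k.

    propose(hints) returns new candidate strings, given the current best ones.
    send(candidate) returns a dict with status, seconds and size.
    """
    kept: list[tuple[float, str]] = []
    for _ in range(rounds):
        hints = [candidate for _, candidate in kept]
        for candidate in propose(hints):
            kept.append((score(baseline, send(candidate)), candidate))
        kept = heapq.nlargest(top_k, kept)  # highest scores first
    return kept
```

The hard cap on `rounds` is what keeps the loop bounded; in practice `send` would be the same Burp-backed sender the tester already triggers by hand, and `propose` would wrap the model call.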

Getting started

- Repo: github.com/KaustubhRai/BurpSuite_LocalAI

- Build the JAR or install the release JAR in Burp → Extensions → Add.

- Local model path: Run Ollama (or an approved local runtime) and set Base URL/Model in the extension tab. Or enable MCP bridge and register the MCP server in your client (Claude Desktop/VS Code).

- Right-click a request → Use as seed → choose mode → Send.

- Watch Logger (Sources → Extensions) for traffic and timings.
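For the local model path, a quick way to check that Ollama is reachable and returns usable structured output is a short Python script like the one below. It uses Ollama's `/api/generate` endpoint on its default port; the model name `llama3` and the `{"candidates": [...]}` shape are assumptions for this example and are not necessarily what the extension asks the model for.

```python
import json
import urllib.request


def parse_candidates(model_text: str, limit: int = 10) -> list[str]:
    """Accept only a JSON object shaped like {"candidates": ["...", ...]}."""
    try:
        doc = json.loads(model_text)
    except json.JSONDecodeError:
        return []
    items = doc.get("candidates") if isinstance(doc, dict) else None
    if not isinstance(items, list):
        return []
    # Drop anything that is not a non-empty string, then cap the count.
    return [c for c in items if isinstance(c, str) and c][:limit]


def generate(prompt: str,
             base_url: str = "http://localhost:11434",
             model: str = "llama3") -> list[str]:
    """Ask a local Ollama instance for candidates and parse them strictly."""
    payload = {"model": model, "prompt": prompt, "stream": False, "format": "json"}
    request = urllib.request.Request(
        f"{base_url}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=60) as response:
        return parse_candidates(json.loads(response.read())["response"])
```

Rejecting anything that is not the expected shape, instead of trying to salvage free-form text, is one way to read the "stricter structured output format" win mentioned earlier: a malformed reply costs one retry rather than a batch of junk requests.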

Conclusion

This is an internal productivity tool for our security team — not a point-and-shoot scanner. Burp remains the single control plane: logging, proxying, auth and scoping stay exactly where they should. The extension speeds iteration so testers can focus on judgment, evidence and remediation.