Tired of data chaos? Try these big data integration tools

Struggling with scattered, unmanageable data? Big data integration tools help developers sync systems, automate workflows and gain valuable insights from real-time data without handling endless scripts or late-night fixes. Don’t let data chaos slow you down. Explore the most innovative tools for your team.

Highlights

You’ll learn why you need data integration tools:

- Automated data processing: Developers no longer have to patch scripts or perform late-night debugging

- Real-time insights: Provides instant analytics, alerts and personalization

- Unified data flow: Combines structured and unstructured data across systems

- Scalability and flexibility: Handles high-volume, multi-source data with ease

- Error handling and data quality: Ensures clean, consistent, production-ready data

Many call big data growth an explosion. In 2024, the world generated 149 zettabytes of data, and this number is expected to reach 394 zettabytes by 2028. For developers, it means one thing: either learn to work with it or get overwhelmed by it.

What’s worse is that the data isn’t sitting in a centralized system; instead, it’s scattered across:

- Cloud apps

- IoT devices

- On-premise systems

- Third-party APIs

Each source stores data in its own format and structure and follows a different update cycle. From product usage logs to customer interactions and supply chain analytics, the volume and speed at which data is collected make manual processing impractical. The solution? Big data integration tools.

These tools take scattered, messy data and make it usable. They clean it up, send it where it’s required and save you from writing scripts just to get two systems to talk to each other. Developers can avoid waking up at 2 a.m. to rebuild broken pipelines or patch hundreds of scripts together. Whether you’re combining user behavior with transaction history or synchronizing product updates and real-time statistics, these tools turn the combined data into useful insights.

In this post, we’ll break down what big data integration tools do, why they’re essential for your dev teams and how to choose one that has a positive impact on your business.

What are big data integration tools?

Big data integration tools connect data across your entire tech stack. They gather data from several locations, like apps, databases, files and APIs, and load it into one centralized repository, so your data reaches the right place in the right format at the time it’s needed. Instead of patching hundreds of scripts together or running manual exports between systems, you let the integration tool automate the process.

Without integration tools, your developers manually collect data from:

- User logs from the cloud

- Sales data buried in your CRM

- Real-time telemetry and sensor data from IoT devices

- Marketing metrics from different APIs

A big data integration platform connects this chaos and builds automated flows, smart mappings and real-time syncs, so developers spend far less time writing and debugging custom code.

But doesn’t ETL do the same job? Here is how these tools differ from Extract, Transform, Load (ETL).

Big data integration tools vs ETL

Data integration tools and ETL are similar because both move data from one location to another. They differ in how they handle scalability, speed and complexity. Here’s how the two compare:

| Aspect | ETL | Big data integration tools |
| --- | --- | --- |
| Use case | Batch data processing and scheduled jobs | Real-time, large-scale and multi-source data handling |
| Data types | Only structured data | Structured, semi-structured and unstructured data |
| Processing style | Batch (daily, weekly, etc.) | Real-time |
| Flexibility | Rigid workflows, which are harder to adapt | Flexible and supports modern and legacy systems |
| Speed | Slow (scheduled intervals) | Fast, continuous data movement |
| Scalability | Limited by traditional systems | Built for high-volume, high-velocity environments |
| Maintenance | Often needs manual fixes and scripting | Typically comes with automated error handling and logging |
| Developer workload | Custom scripts, frequent debugging | Automated data integration process with minimal coding |
| Best for | Reporting and analytics on historical data | Real-time dashboards, modern data stacks and large-scale operations |

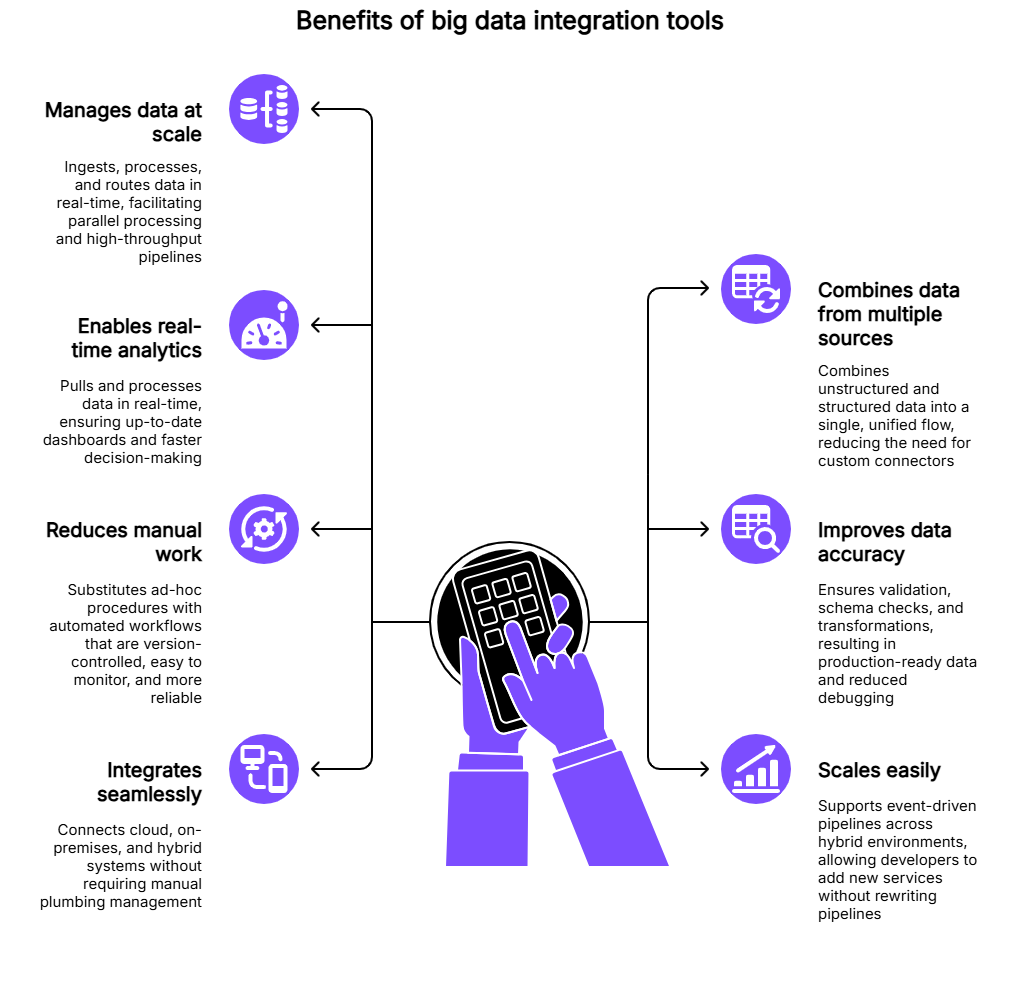

Benefits of big data integration platforms

Manages data at scale

Your company generates vast amounts of diverse data, such as system logs and user interactions with your product. Integration platforms can ingest, process and route this data in real time. They support parallel processing, retry logic and high-throughput pipelines, so your developers work with pipelines that keep running under load.

For instance, a fintech firm that gathers transactional data, fraud warnings and customer behavior data from multiple regions cannot depend on brittle scripts. Integration tools let developers build a high-throughput pipeline that delivers information to the correct system (e.g., a data warehouse) without delay or data loss, even when traffic spikes.
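To make the retry logic mentioned above concrete, here is a minimal sketch of retrying a failed load with exponential backoff. The names `with_retries` and `flaky_load` are hypothetical and written for illustration only; real integration platforms ship their own retry policies, so treat this as one possible shape rather than any product's implementation.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def with_retries(
    task: Callable[[], T],
    attempts: int = 3,
    base_delay: float = 0.1,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run task, retrying on any exception with exponential backoff."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            return task()
        except Exception:
            if attempt == attempts:
                raise  # out of retries: surface the last error
            # Wait base_delay, then 2x, then 4x, ... before the next try
            sleep(base_delay * 2 ** (attempt - 1))


# Hypothetical load step that fails twice before succeeding
calls = {"count": 0}


def flaky_load() -> str:
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("warehouse unavailable")
    return "loaded"


delays = []  # record the waits instead of actually sleeping
result = with_retries(flaky_load, attempts=5, sleep=delays.append)
```

Passing `sleep` as a parameter keeps the sketch testable: the example records the backoff delays instead of pausing.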

Combines data from multiple sources

Data resides in silos: CRMs, CDPs, data analytics platforms, databases, event streams and third-party APIs. Each source has its own schema and synchronization requirements. Data integration tools combine unstructured and structured data into a single, unified flow. This reduces the number of custom connectors developers need to maintain, and they don’t have to write new code every time a system is added.

For instance, a developer builds a dashboard that combines lead activity in a CRM, payment history in your SQL database and behavioral data in an in-app event stream. Instead of writing three separate scripts, they can let a big data integration tool map the sources and synchronize them automatically.
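As a rough illustration of what mapping three sources into one flow involves, the sketch below merges CRM leads, payments and in-app events into one profile per customer. The field names (`customer_id`, `status`, `amount`) and the sample rows are assumptions made up for this example, not a real schema.

```python
from collections import defaultdict


def unify(crm_leads, payments, events):
    """Merge three sources into one profile per customer_id."""
    profiles = defaultdict(
        lambda: {"lead_status": None, "total_paid": 0.0, "event_count": 0}
    )
    for lead in crm_leads:
        profiles[lead["customer_id"]]["lead_status"] = lead["status"]
    for payment in payments:
        profiles[payment["customer_id"]]["total_paid"] += payment["amount"]
    for event in events:
        profiles[event["customer_id"]]["event_count"] += 1
    return dict(profiles)


# Hypothetical sample data standing in for the CRM, SQL database and event stream
crm_leads = [
    {"customer_id": "c1", "status": "qualified"},
    {"customer_id": "c2", "status": "new"},
]
payments = [
    {"customer_id": "c1", "amount": 40.0},
    {"customer_id": "c1", "amount": 10.0},
]
events = [
    {"customer_id": "c1", "name": "login"},
    {"customer_id": "c2", "name": "view_pricing"},
    {"customer_id": "c1", "name": "export"},
]

unified = unify(crm_leads, payments, events)
```

An integration tool does this mapping through configuration rather than hand-written code, but the underlying join on a shared key is the same idea.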

Enables real-time analytics and faster decision-making

Big data integration tools pull and process data in real time, so your dev team doesn’t have to wait for batch updates. Your dashboards, alerts and apps stay up to date, and teams can act on developments as they happen. Developers can detect problems and trends early and build systems that respond to new data.

For example, when your developers work on an online store and want to measure cart abandonment, a data pipeline can feed each abandonment event to your analytics tool in real time. The dev team can then trigger a push notification or email reminder, or update a live dashboard, without waiting hours for a data refresh.
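The cart-abandonment case can be sketched in a few lines. The event shape (`user_id`, `type`, `at`) and the 30-minute timeout are assumptions chosen for illustration; a production pipeline would read these events from a stream rather than a list.

```python
from datetime import datetime, timedelta


def abandoned_carts(events, now, timeout=timedelta(minutes=30)):
    """Return users whose last add_to_cart has no later checkout
    and is at least `timeout` old. Events are assumed time-ordered."""
    last_add = {}
    for event in events:
        user = event["user_id"]
        if event["type"] == "add_to_cart":
            last_add[user] = event["at"]
        elif event["type"] == "checkout":
            last_add.pop(user, None)  # cart completed, nothing to flag
    return sorted(user for user, at in last_add.items() if now - at >= timeout)


# Hypothetical events for three shoppers
events = [
    {"user_id": "u1", "type": "add_to_cart", "at": datetime(2025, 1, 1, 11, 0)},
    {"user_id": "u2", "type": "add_to_cart", "at": datetime(2025, 1, 1, 11, 10)},
    {"user_id": "u2", "type": "checkout", "at": datetime(2025, 1, 1, 11, 20)},
    {"user_id": "u3", "type": "add_to_cart", "at": datetime(2025, 1, 1, 11, 50)},
]

to_remind = abandoned_carts(events, now=datetime(2025, 1, 1, 12, 0))
```

Here only `u1` is flagged: `u2` checked out, and `u3` added an item just ten minutes before the check.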

Improves data accuracy, quality and consistency

Bad data breaks your workflows and processes. Duplicates, nulls or format mismatches create downstream bugs that are challenging to track even for the most experienced developer. Integration tools ensure data integrity through validation, schema checks and transformations, so the data reaching your warehouse is production-ready. Your developers avoid late-night debugging and wasting time fixing broken workflows.

For instance, a health-tech app collecting patient metrics from wearables gets data in different formats depending on the device. Integration tools can standardize this to ensure consistent records in the central repository.
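The standardization step might look like the following sketch. The two device payload formats (`hr` with an ISO timestamp, `heart_rate_bpm` with epoch seconds) and the plausibility range of 20 to 250 bpm are hypothetical, chosen only to show validation, schema mapping and de-duplication together.

```python
from datetime import datetime, timezone


def normalize(record):
    """Map a device-specific reading onto one schema, or raise ValueError."""
    if "hr" in record:  # hypothetical device A: ISO 8601 timestamp
        bpm = record["hr"]
        ts = datetime.fromisoformat(record["time"])
    elif "heart_rate_bpm" in record:  # hypothetical device B: epoch seconds
        bpm = record["heart_rate_bpm"]
        ts = datetime.fromtimestamp(record["epoch"], tz=timezone.utc)
    else:
        raise ValueError("unknown device format")
    if bpm is None or not 20 <= float(bpm) <= 250:
        raise ValueError(f"implausible heart rate: {bpm!r}")
    return {
        "patient_id": record["patient_id"],
        "bpm": int(float(bpm)),
        "recorded_at": ts.astimezone(timezone.utc).isoformat(),
    }


def clean(records):
    """Split records into de-duplicated valid rows and rejected rows."""
    valid, rejected, seen = [], [], set()
    for record in records:
        try:
            row = normalize(record)
        except (ValueError, KeyError) as exc:
            rejected.append((record, str(exc)))
            continue
        key = (row["patient_id"], row["recorded_at"])
        if key not in seen:  # drop duplicate readings for the same instant
            seen.add(key)
            valid.append(row)
    return valid, rejected


raw = [
    {"patient_id": "p1", "hr": 72, "time": "2025-01-01T08:00:00+00:00"},
    {"patient_id": "p1", "heart_rate_bpm": "72", "epoch": 1735718400},
    {"patient_id": "p2", "heart_rate_bpm": 400, "epoch": 1735718400},
]
valid, rejected = clean(raw)
```

The first two readings describe the same patient at the same instant, so only one survives, and the 400 bpm reading is rejected instead of reaching the repository.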

Reduces manual work and operational errors

Manually exporting data or writing quick one-off scripts gets the job done at first. But over time, these scripts become a headache: they’re hard for developers to test, and they don’t scale as your data grows. Integration tools replace such ad-hoc procedures with automated workflows that are version-controlled, easy to monitor and more reliable.

For instance, a SaaS team synchronizes app usage data into its billing platform. After switching to an integration tool, the process is automated and easier to monitor, and developers can avoid debugging scripts during a release window.

Scales easily with growing data volumes

For developers, controlling the data flow is relatively easy in the early stages of a system. As the system grows more complex, maintaining that flow becomes harder. You deploy microservices, spin up new cloud services or start collecting data from more devices, and the setup becomes complicated quickly.

Data integration tools support event-driven pipelines across hybrid environments and work well in containerized settings. Developers can add new services or data sources without rewriting their pipeline.

Integrates seamlessly with cloud, on-premises and hybrid systems

Modern environments are rarely all-in on one setup. You store user events in a cloud data warehouse, run critical services on on-premise servers and pull partner data from a hybrid setup. A big data integration tool connects them.

For instance, if your team pulls user behavior from a cloud-based analytics tool, processes sensitive transactions in an on-premise database and runs ML models in a hybrid GPU cluster, an integration tool keeps that entire workflow together and in sync. Developers don’t have to set up custom SSH tunnels or manage firewall rules for every connection by hand.

Best big data integration tools

Apache NiFi

Apache NiFi is an open-source tool used primarily for building real-time, flow-based data pipelines. Its drag-and-drop UI, built-in processors and back-pressure handling make it well suited to moving and monitoring data across systems, including the cloud.

Talend

Talend is a leading big data integration tool that supports batch and real-time data jobs. It connects with tools such as Hadoop, Spark and cloud data lakes. This helps you build scalable ETL or ELT (extract, load, transform) workflows.

Informatica

Informatica is an enterprise platform known for its reliability, metadata management and compliance features. It’s often used in high-governance environments and supports a wide range of data sources and scheduling needs.

Microsoft Azure Data Factory

Azure Data Factory is a cloud-native service for building and orchestrating workflows within the Azure ecosystem. It offers both low-code and code-based development features for hybrid and scalable data integrations.

Key capabilities to look for in big data integration tools

The right data integration tools come with the following capabilities:

Real-time and batch processing

Developers need both. Real-time streams (e.g., Kafka, Kinesis) handle behavioral data, fraud alerts and personalization. Batch jobs (e.g., Spark jobs scheduled with Airflow) are better for analytics, reporting and historical data syncs. A solid big data integration tool supports both processing types in a unified way, so developers no longer juggle separate platforms to move data across their pipeline.
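One way to picture unified processing is a single transformation that accepts any iterable, whether a finished batch or a live stream. The sketch below uses a Python generator as a stand-in for a streaming consumer; the function names and the `amount_cents` field are assumptions for illustration, and no Kafka or Kinesis client is involved.

```python
from typing import Iterable, Iterator


def to_usd(event: dict) -> dict:
    """The shared transformation: add a dollar amount to each event."""
    return {**event, "amount_usd": event["amount_cents"] / 100}


def run_pipeline(source: Iterable[dict]) -> Iterator[dict]:
    """Apply the same logic to a list (batch) or a generator (stream)."""
    for event in source:
        yield to_usd(event)


batch = [{"id": 1, "amount_cents": 1999}, {"id": 2, "amount_cents": 500}]


def stream() -> Iterator[dict]:
    # Stand-in for a consumer loop that yields events as they arrive
    yield from batch


batch_result = list(run_pipeline(batch))
stream_result = list(run_pipeline(stream()))
```

Because the business logic lives in one function, both modes produce identical output, which is the property a unified tool aims to give you.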

Cloud-native and hybrid compatibility

Integration tools should work with cloud providers (AWS, GCP and Azure) and on-premises systems. Your developers need container and Kubernetes compatibility for flexible deployment. Choose a platform with built-in support for common storage and table formats, such as S3-compatible object storage, Parquet and Delta Lake.

ETL/ELT flexibility

Flexibility between ETL and ELT is essential for big data integration tools because data comes from diverse sources and business demands vary. Some cases call for traditional ETL, where data is transformed before it is loaded, while others are better served by ELT, where raw data is loaded first and transformed inside the warehouse. When your integration tools support both, developers can choose whichever workflow fits the current challenge. This flexibility helps you handle growing and changing data streams, optimize performance and costs, integrate new systems more easily and adapt quickly when requirements shift.
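The difference between the two orderings is easier to see side by side. This sketch uses an in-memory SQLite database as a stand-in for a warehouse; the table names and sample rows are made up for the example.

```python
import sqlite3

# Hypothetical raw export: amounts arrive as untidy text
raw_orders = [("A-1", " 19.99 "), ("A-2", "5.00"), ("A-3", "n/a")]


def parse_amount(text):
    try:
        return float(text.strip())
    except ValueError:
        return None


def run_etl(conn):
    """ETL: transform in application code, then load only clean rows."""
    rows = []
    for order_id, text in raw_orders:
        amount = parse_amount(text)
        if amount is not None:
            rows.append((order_id, amount))
    conn.execute("CREATE TABLE orders_etl (order_id TEXT, amount REAL)")
    conn.executemany("INSERT INTO orders_etl VALUES (?, ?)", rows)


def run_elt(conn):
    """ELT: load raw rows as-is, then transform with SQL in the warehouse."""
    conn.execute("CREATE TABLE orders_raw (order_id TEXT, amount_text TEXT)")
    conn.executemany("INSERT INTO orders_raw VALUES (?, ?)", raw_orders)
    conn.execute(
        """
        CREATE TABLE orders_elt AS
        SELECT order_id, CAST(TRIM(amount_text) AS REAL) AS amount
        FROM orders_raw
        WHERE TRIM(amount_text) GLOB '[0-9]*'
        """
    )


conn = sqlite3.connect(":memory:")
run_etl(conn)
run_elt(conn)
etl_rows = conn.execute("SELECT order_id, amount FROM orders_etl ORDER BY order_id").fetchall()
elt_rows = conn.execute("SELECT order_id, amount FROM orders_elt ORDER BY order_id").fetchall()
```

Both paths end with the same clean table. ELT also keeps `orders_raw`, which lets you re-run or change the transformation later without re-extracting from the source.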

Team expertise

Some teams prefer writing SQL or Python, while others benefit from visual interfaces. The best tools offer both options. A rich UI can help analysts and operations teams move faster, while SDKs and APIs allow developers to build custom pipelines.

Security and data quality

You need a secure platform offering features like role-based access control, data encryption and activity logging to protect your sensitive information. Data integration tools with schema validation, anomaly detection and error handling are critical to maintain trust and accuracy across pipelines.

Scalability and governance

Integration tools should scale with rising data volumes and increasingly complex applications. Tools that support distributed processing help your developers handle fault tolerance and large workloads so pipelines keep flowing. Your team also needs governance features: data lineage to trace where data came from, metadata catalogs to categorize it and version control to track changes to pipelines.

Cost and integration

The cost of these integration tools differs based on their pricing model. Some charge based on the amount of data you move, others charge per connector and some bill you for the resources used. If your data jobs run occasionally, a serverless model can be more cost-effective, while a dedicated cluster usually suits continuous workloads better. Whichever model you pick, check that the tool integrates with your CI/CD setup and orchestrators such as Apache Airflow, so it fits into your current development and deployment processes.
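A quick break-even calculation can help when weighing serverless against dedicated pricing. The rates below are entirely hypothetical placeholders, not real vendor prices; substitute the figures from your own quotes.

```python
def serverless_cost(job_hours: float, rate_per_hour: float) -> float:
    """Usage-based pricing: pay only for the hours jobs actually run."""
    return job_hours * rate_per_hour


def cheaper_model(job_hours: float, rate_per_hour: float, dedicated_flat_fee: float) -> str:
    """Compare a month of usage-based billing with a flat cluster fee."""
    usage = serverless_cost(job_hours, rate_per_hour)
    return "serverless" if usage < dedicated_flat_fee else "dedicated"


RATE = 4.0      # hypothetical serverless price per job-hour
FLAT = 1000.0   # hypothetical monthly fee for a dedicated cluster

break_even_hours = FLAT / RATE                # hours per month where costs match
occasional = cheaper_model(20, RATE, FLAT)    # a few short jobs per month
continuous = cheaper_model(720, RATE, FLAT)   # running around the clock
```

With these placeholder numbers, usage below 250 job-hours a month favors serverless and anything above favors the dedicated cluster.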

Role of Contentstack in big data integration

Connects content and data using robust APIs

Contentstack supports data integration through documented, automated APIs. The APIs forward your structured data to your data warehouses, front-end applications and other connected systems, which reduces errors and removes time-consuming manual synchronization. Synchronization happens in real time, regardless of whether you work in the cloud, on-premises or in a hybrid environment. Furthermore, Contentstack Data Activation Layer (DAL) supports both real-time and batch data flows. As a result, the data is in the right place at the right time, and your tools and teams are always using the same data.

Integrates with cloud data warehouses

Contentstack integrates with AWS, Google Cloud and Azure. Using the platform, developers send structured content into S3, BigQuery or Azure Data Lake so that you do not have to re-engineer your infrastructure.

Supports real-time and batch data flows

Contentstack DAL gathers, processes and streams behavioral and engagement data from your content layer. You can send data to downstream pipelines in real time or use it to trigger personalization or machine learning workflows.

Ensures data exchange across systems and tools

DAL simplifies integration between Contentstack and your broader data ecosystem. It supports bi-directional data exchange across customer data platforms, cloud storage, product databases and CRM systems. This ensures your content and data stay in sync.

Works with BI tools to deliver insights from structured content

If you need structured content in Looker, Power BI or Tableau, DAL makes it easier to feed clean, consistent content and engagement data directly into your analytics layer. This helps you track performance, measure engagement and guide strategy.

Provides enterprise-grade scalability and flexibility

Contentstack’s DAL connects your CMS to big data sources in real time. It pulls real-time data insights from platforms like Snowflake or Segment directly into content workflows. This lets you deliver personalized, data-driven experiences instantly across channels. With DAL, your content performs at scale.

Case study: BOOST drives fan engagement with data-powered Contentstack

BOOST used Contentstack to streamline and automate its data pipeline, turning vast, complex fan data into actionable insights. By integrating Contentstack into its stack, BOOST enabled clients like the Big Ten Conference to organize, publish and personalize content based on real-time data. This data-driven approach improved fan engagement and also opened new opportunities for revenue generation, personalization and analytics-driven decision-making across 28 sports and 18 schools.

After using Contentstack, Tim Mitchell, Chief Commercial Officer at BOOST, said:

“Contentstack is not only going to enable us to elevate the fan experience through the Big Ten and other conferences, it’s also going to more seamlessly integrate revenue generation vehicles through merchandising, advertising and sponsorship.”

Read the complete case study here.

Built for composable architecture and hybrid environments

Contentstack has a modular, API-first architecture, which grows with your enterprise. DAL provides secure, regulated access to data, improves content-driven decision making and minimizes operational challenges by combining structured data with the back-end system.

Supports secure, governed access to large datasets

Enterprise-grade governance controls ensure secure access to content and data pipelines. This is important for managing sensitive data across multiple teams or regions.

Speeds up content-driven decision-making

Contentstack connects with your data pipelines and business intelligence (BI) tools, offering real-time insights about user behavior, campaign effectiveness and product performance. You can use this information to power your content processes. With real-time data, marketing, product and development teams can also make better-informed decisions.

FAQs

What are data integration tools?

Data integration tools are powerful platforms that bring together data from different places, like APIs, databases or files, and combine them into one central spot. They automate the cleaning of data, making sure workflows are smooth and accurate.

What is big data integration?

Big data integration involves bringing together and processing vast amounts of structured and unstructured data from various sources. These platforms collect, clean, blend and prepare the data for businesses to quickly run analytics, apply machine learning and make smarter decisions at scale.

What are the best data integration tools?

The best data integration tools include Apache NiFi, Talend, Informatica and Microsoft Azure Data Factory. Choose one based on your data volume, team expertise, infrastructure and integration needs.

Is big data an ETL tool?

No, big data is not an ETL tool. Big data refers to the massive, fast-growing datasets your company generates and analyzes. ETL tools, on the other hand, extract, transform and load that data into systems for processing and insights.

Learn more

The volume of data is increasing with every passing day. Modern businesses have little choice but to bring their data together and put it to practical use. Big data integration tools reduce complexity and manual effort. This allows developers to focus on innovation and act on real-time feedback, rather than spending time fixing broken workflows or data pipelines. If you are ready to address your data difficulties with a versatile and dependable platform, Contentstack’s APIs and DAL make integrating content and data easier. Talk to us to learn how the platform helps your teams work smarter and make data-driven decisions.

About Contentstack

The Contentstack team comprises highly skilled professionals specializing in product marketing, customer acquisition and retention, and digital marketing strategy. With extensive experience holding senior positions at renowned technology companies across Fortune 500, mid-size, and start-up sectors, our team offers impactful solutions based on diverse backgrounds and extensive industry knowledge.

Contentstack is on a mission to deliver the world’s best digital experiences through a fusion of cutting-edge content management, customer data, personalization, and AI technology. Iconic brands, such as AirFrance KLM, ASICS, Burberry, Mattel, Mitsubishi, and Walmart, depend on the platform to rise above the noise in today's crowded digital markets and gain their competitive edge.

In January 2025, Contentstack proudly secured its first-ever position as a Visionary in the 2025 Gartner® Magic Quadrant™ for Digital Experience Platforms (DXP). Further solidifying its prominent standing, Contentstack was recognized as a Leader in the Forrester Research, Inc. March 2025 report, “The Forrester Wave™: Content Management Systems (CMS), Q1 2025.” Contentstack was the only pure headless provider named as a Leader in the report, which evaluated 13 top CMS providers on 19 criteria for current offering and strategy.

Follow Contentstack on LinkedIn.
